Security versus privacy has become an increasingly common question in our country since 9/11 and other terrorist attacks around the world. Now, many people are raising serious questions about how far the government can reach into our personal data. If you have an iPhone, this debate applies to you.

In the wake of the San Bernardino shooting in December 2015, in which a married couple opened fire at a community facility in what many people believed was an act of terrorism, the FBI asked Apple to help it recover data from the iPhone of Syed Farook, one of the two shooters.

The government believed Farook and his wife, Tashfeen Malik, had been radicalized by outside forces, and it wanted proof. But investigators could not unlock Farook’s iPhone, so they turned to Apple for help.

In response, Apple CEO Tim Cook posted a public letter on the company’s website, addressed to everyone who uses Apple’s technology, explaining the FBI’s request.

While it may sound like much ado about absolutely nothing, the government was asking for an unprecedented amount of access. I cannot do the issue justice, so I’ll turn the column over to Cook himself, addressing the FBI’s request:

“We have great respect for the professionals at the FBI, and we believe their intentions are good. Up to this point, we have done everything that is both within our power and within the law to help them. But now the U.S. government has asked us for something we simply do not have, and something we consider too dangerous to create. They have asked us to build a backdoor to the iPhone.”

Simply put, the FBI was asking Apple to build a special version of iOS, the operating system that runs on the iPhone. Once installed, that update would let the FBI get around the phone’s security and recover its data and files, and Apple warned that the same tool could be used on any iPhone seized during an investigation. I do not want to bore anyone, so here is encryption in a nutshell: when data is encrypted, it is scrambled into unreadable code before it is stored. Anyone who tries to read it without the key sees only garbled, meaningless characters. Only when someone with the right key, such as the phone’s passcode, unlocks it is the code unscrambled so the phone can read it.
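For readers curious about what that “scrambling” looks like, here is a toy sketch in Python. It is an illustration only, built on assumptions: the function names (`derive_key`, `keystream`, `scramble`) are hypothetical, and this is not how iOS actually encrypts data; real devices rely on vetted ciphers such as AES. The sketch simply shows the basic idea that the same key locks and unlocks the data, while the wrong key leaves it garbled.

```python
import hashlib
import os


def derive_key(passcode: str, salt: bytes) -> bytes:
    # Hypothetical helper: stretch a short passcode into a 32-byte key.
    # The high iteration count makes each guess slower for an attacker.
    return hashlib.pbkdf2_hmac("sha256", passcode.encode(), salt, 100_000)


def keystream(key: bytes, length: int) -> bytes:
    # Toy keystream: SHA-256 of (key + counter), concatenated until long enough.
    # Not secure for real use (no nonce, not a vetted cipher).
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return out[:length]


def scramble(data: bytes, key: bytes) -> bytes:
    # XOR each byte with the keystream; applying this twice with the
    # same key gives back the original data.
    return bytes(d ^ k for d, k in zip(data, keystream(key, len(data))))


if __name__ == "__main__":
    salt = os.urandom(16)
    message = b"Meet at noon"
    locked = scramble(message, derive_key("1234", salt))
    print(locked)  # unreadable bytes
    print(scramble(locked, derive_key("1234", salt)))  # b'Meet at noon'
    print(scramble(locked, derive_key("0000", salt)))  # still garbled
```

The point of the passcode step is that without the right passcode there is no key, and without the key the stored bytes stay meaningless.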

There are two big problems with what the government wants to do. The first is that once this piece of software exists, anyone who gets hold of it would instantly have the power to unlock and collect data from any iPhone in the world. For now, only the government would use it, but less savory players could take advantage of it too. Nothing on the Internet is truly safe from hacking and data theft. After all, users of the infidelity site Ashley Madison trusted it to keep their secrets, and look what happened when it was hacked in 2015.

The second problem is that the government would have unprecedented surveillance access to your iPhone. I heard a fantastic metaphor for this situation the other day, and I’d like to share it with you now.

Let’s say you own a condo complex, and a terrible crime takes place in one of the buildings. The police come and ask you to let them in to examine the crime scene. At this point, it makes perfect sense to comply. But what happens if the police then ask you for a skeleton key that would let them into every building in the complex, should the need arise?

What happens if this key falls into the hands of an unscrupulous cop or a criminal? Suddenly, that person would have access to everyone’s personal belongings. This is the biggest reason people oppose the FBI’s request. We already got a taste of what near-unlimited government surveillance looks like during the 2013 NSA scandal, and not many of us liked what we saw.

It boils down to this: we live in a world where technology is used for both creative and destructive purposes like never before. As a nation, we must decide where the right balance between liberty and security lies, and I, for one, applaud Apple for trying to keep that balance even.

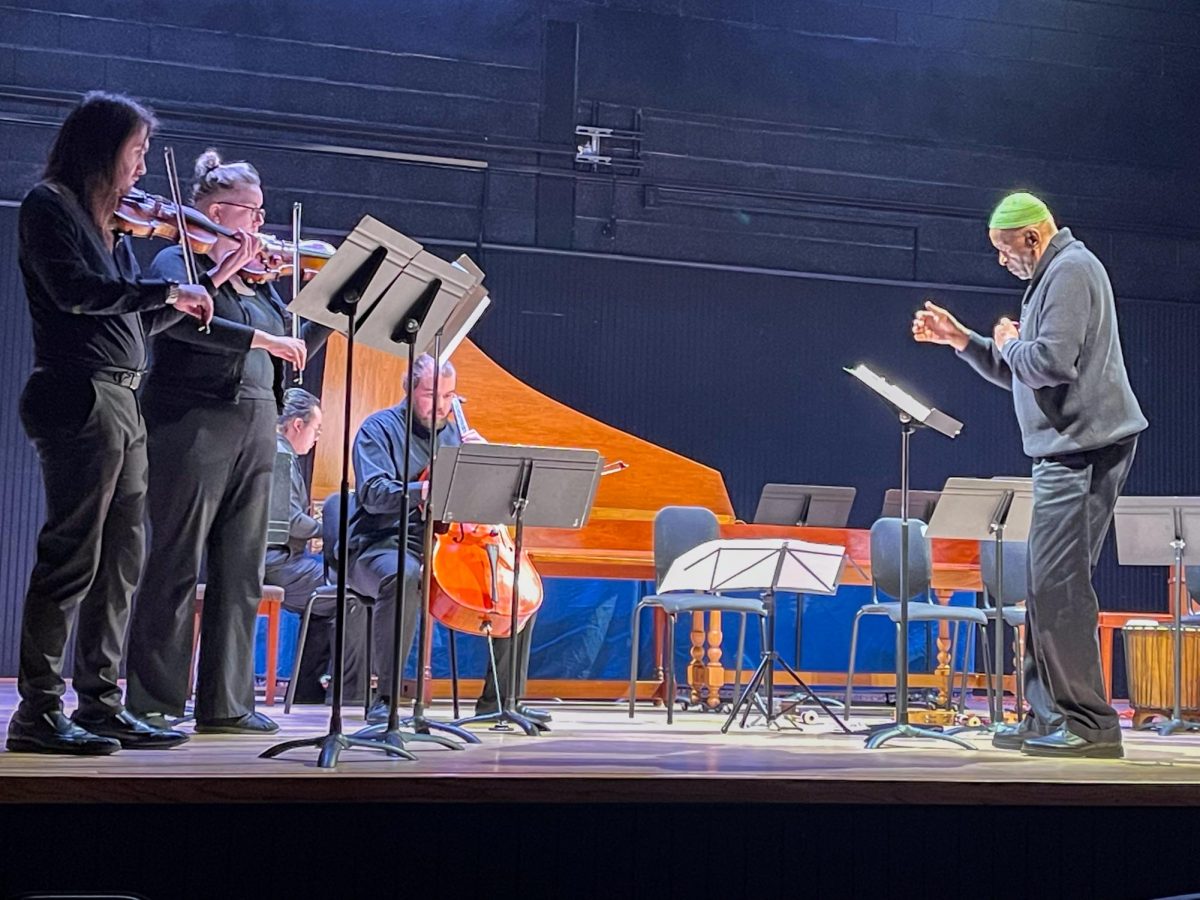
