With the way artificial intelligence (AI) continues to work itself into people’s day-to-day lives, it makes you wonder if the people in charge of AI companies have ever been to the movies.

Recently, one particular AI program has made national headlines not only for passing the United States Medical Licensing Exam and the bar exam, but also for its effect on schools, where college students may be using it to cheat.

Chat Generative Pre-trained Transformer (ChatGPT) is a chatbot developed by OpenAI that generates uncannily human-like responses to almost anything it can understand, and it had 1 million users only five days after launch.

Because the dilemma of AI in schools is relatively new, those in academia are still learning how best to deal with its ramifications for the educational landscape.

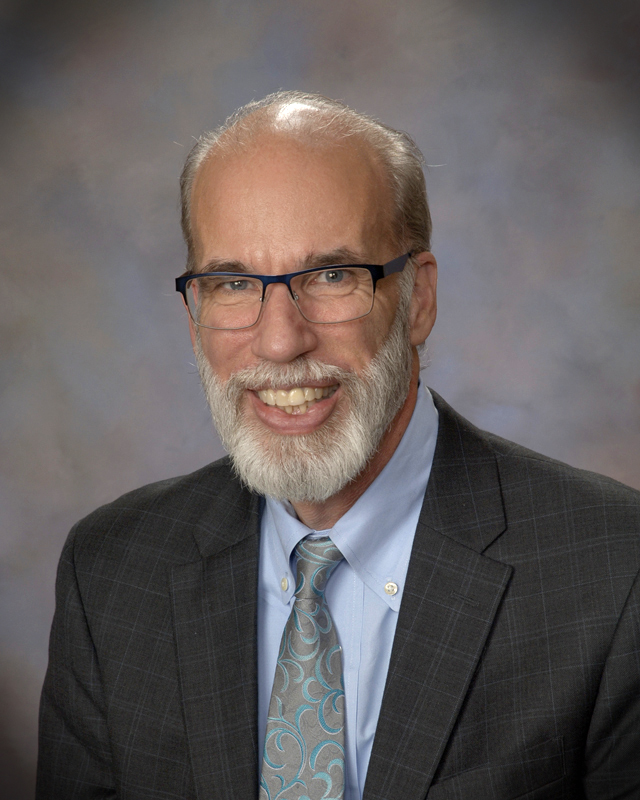

Sanford Tweedie, dean of the Ric Edelman College of Communication & Creative Arts, welcomes the idea of technology changing the way we approach education.

“We should have known this was coming with artificial intelligence right? I mean, it’s a very sophisticated chatbot but it changes everything,” said Tweedie. “It shifts the way we have to approach education. We have to ask, ‘Why are we teaching what we’re teaching and why we teach it in the way we’re teaching.’ So to me, this is incredibly exciting.”

While the idea excites him, deciding how to implement AI programs in classes is going to be a group project rather than an individual verdict.

“We’re not sure yet. We’re going to have a forum in the near future to have people discuss this. I think before we can figure out what we’re going to do with it, people have to understand what it is. If you don’t know it and you just started playing with it, you got a big learning curve. But to me, it’s clear prohibiting its use is not possible,” said Tweedie.

The forums Tweedie mentions will include students and professors and will explore how AI affects each of the university's colleges differently.

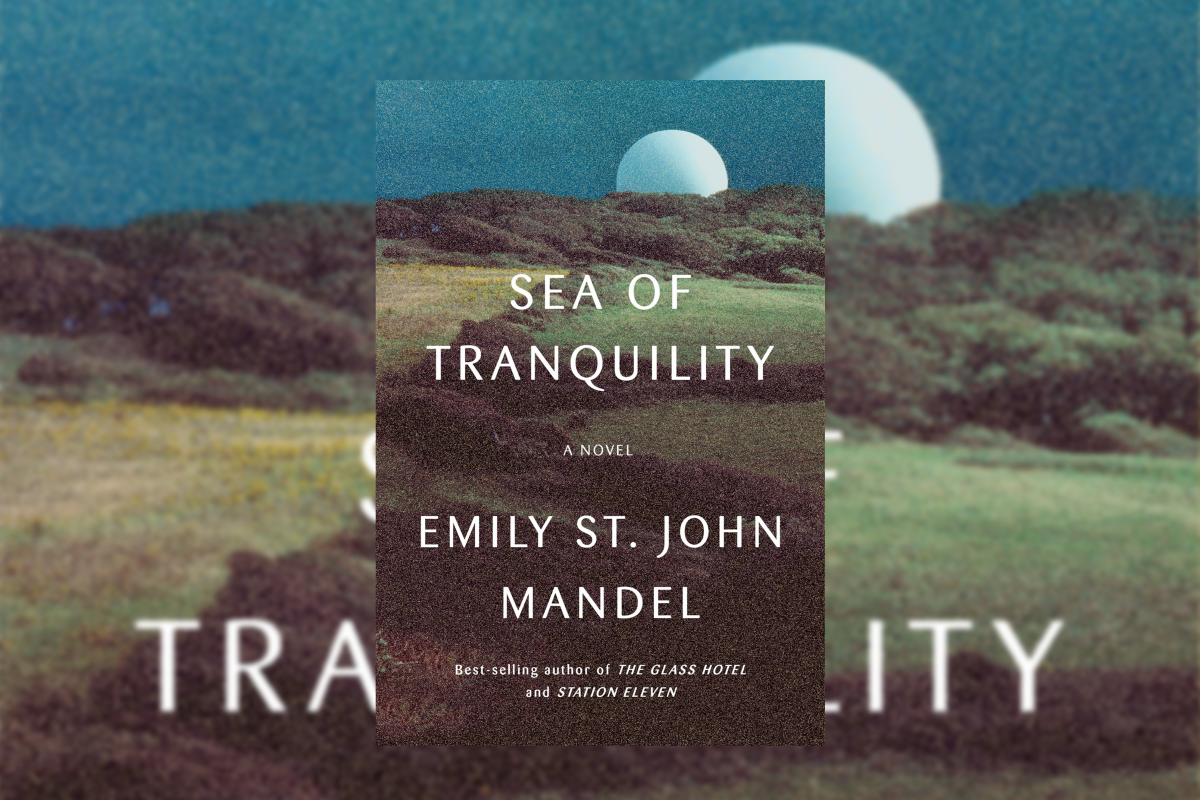

Another OpenAI invention that has made its way into education is DALL-E 2, which creates realistic images and art from descriptions written in natural language.

While ChatGPT is more helpful to students whose main objective is writing, DALL-E 2 gives art students the ability to instantly create images they can then use in their work.

Professor Elizabeth Shores teaches digital media and techniques and has students use DALL-E 2 for some of their assignments.

Shores not only had students use AI in class but also held discussions with them about how they personally feel about programs that could one day take jobs away from them.

One of their students, Audrey Johnson, a sophomore art major with a specialization in graphic design, isn’t as worried as others about AI image generators taking away jobs.

“Personally, I don’t think any of the AI art programs will last that long because there is the other concern about stolen images and artwork being taken and being used in the programs,” said Johnson.

That is the other glaring ethical concern with AI art generators: where, and from whom, are the programs taking?

Each art generator functions differently, but for the most part, all draw on existing artwork, ranging from that of well-known artists to that of artists who are struggling to get by.

“These artificial intelligence-assistance image generation programs essentially are being taught through algorithms to scrape content from the internet, to be essentially trained to look at different kinds of images. And those images were created by artists. So the issue becomes, is it okay to be sourcing these images from other people,” said Shores.

Shores doesn’t buy into the alarmist idea that AI will replace artists’ jobs wholesale and holds a nuanced opinion on the matter, but the conversations held in digital media and techniques reflect concerns that those less familiar with these programs may have.

Shores isn’t the only professor who’s found a way of incorporating AI as a tool in the classroom. Amanda Almon, the chair of biomedical art, not only has her students use AI in her 3D modeling class but also holds a U.S. Army and Defense grant with the college of engineering for using AI in virtual reality.

When students use the programs for class assignments, Almon requires them to explain how they manipulated the generated art to turn it into something more than just a product of the program.

“If you use it within the process, and you’re transparent about it, and show me how you did sketches with it, or you did concept art with it, and then you actually use it within your final digital piece and then you manipulated it, well then as a professor I’d say, ‘Hey, that’s being creative,’” said Almon.

Even though these AI systems can produce realistic images, Almon notes that they tend to struggle to emulate the work of an actual person, especially when it comes to medical illustration.

“So what I do as a medical illustrator has to be super accurate because a doctor or a patient has to learn from it. I gave the AI a test and said, ‘Create me an anatomically correct heart that can teach a patient about blood flow.’ The hearts that I got back were things like heart balloons. Basically, I got something that wasn’t an anatomically correct heart… So for medical accuracy and science accuracy, it doesn’t understand,” said Almon.

Cole Milatro, a recent Rowan University graduate who took Almon’s 3D modeling class last semester, echoed similar sentiments about using AI properly.

“I see it as a tool for helping you get pointed in the right direction but not as an answer,” said Milatro.

For the most part, the professors interviewed do not mind students using these programs as aids. All they ask in return is transparency from the student.

“They want to use it to cheat in class right? As a professor, I’ll say, show me how you use it like, show me within Photoshop, show me transparently… What did you take? What did you cite?… And how did you work with it to make something better?” Almon said. “So if students just sort of show me the final image and they can’t show me the process behind that image… then I’ll be like, ‘Hey, that’s cheating.’”

The issue of cheating runs parallel to concerns about art plagiarism.

“If an artist is using artificial intelligence-assisted image generation programs, it is important for them to say so. I do think that is something that is important, that sort of transparency… I do think ethically, it’s reasonable,” said Shores.

AI is a mixed bag, with plenty of positive and negative traits depending on your viewpoint. One thing is certain, though: AI is here, and it isn’t going anywhere.

For comments/questions about this story, tweet @TheWhitOnline or email [email protected]

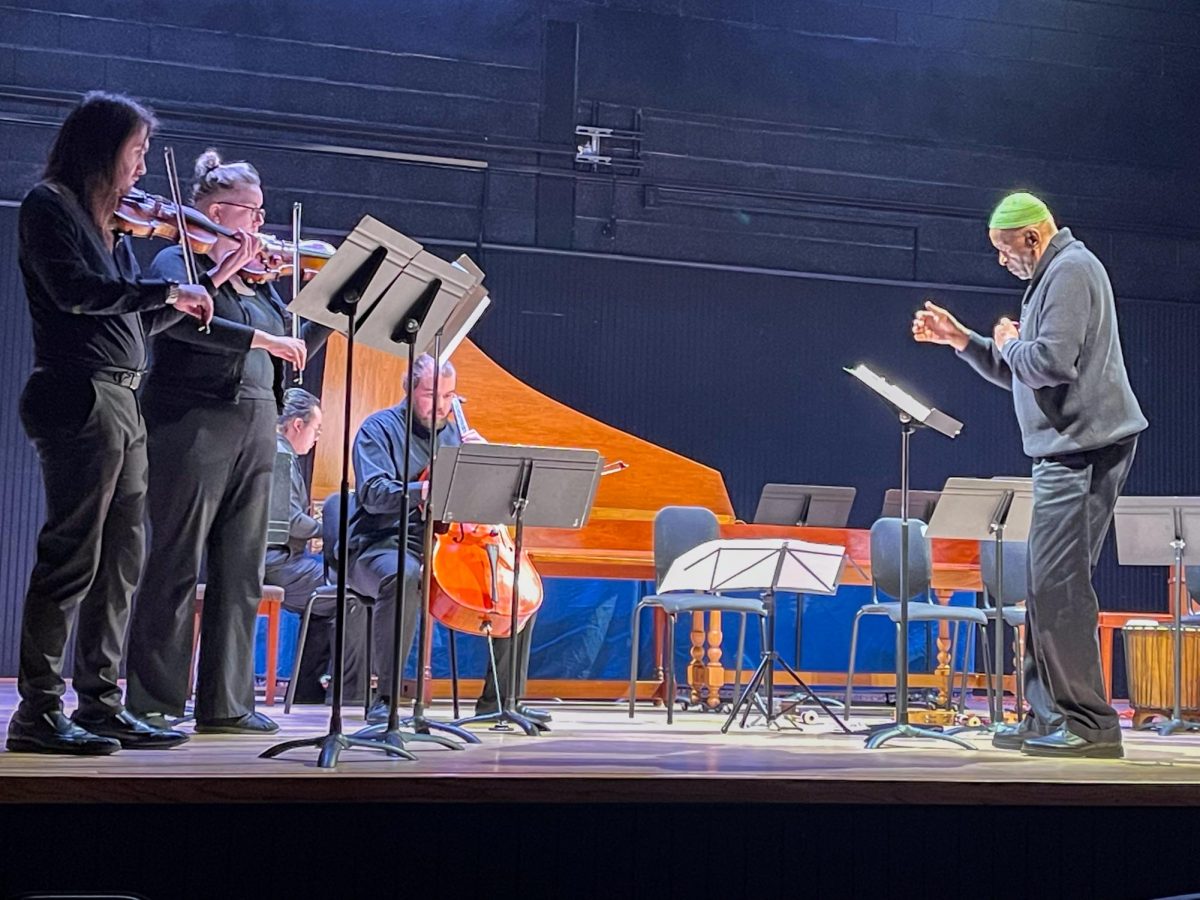
