Artificial intelligence, now commonly known as AI, is here to stay. As students, we may not find this new world so hard to navigate: it can make school easier by organizing our lives and thoughts, outlining, and even completing papers and other projects. Yet the use of generative AI, like OpenAI’s ChatGPT or Google’s Bard, remains contentious.

Rowan’s official guidance leaves it to individual departments or professors to decide whether AI may be used. Even where professors allow it, work produced by AI must always be cited; used liberally or without citation, it can be considered plagiarism.

With schools like Rowan taking stances on AI, this raises the question: is AI a tool that helps us become better students? Or is it hindering us?

Generative AI in its current form hasn’t been around for long; ChatGPT launched only in 2022. ChatGPT, Bard, and other platforms are built on large language models (LLMs), which are trained on mountains of text so that they learn language (grammar and semantics) and can generate answers to questions and respond to human input.

It’s important to note that ChatGPT and its contemporaries are still very new. While they are powerful language models that their companies regularly update and tweak to be more efficient and accurate, LLMs are prone to problems of bias and accuracy (many users recently noticed that ChatGPT was unable to count the “r”s in “strawberry”).

Problems aside, schools like Rowan are homing in on generative AI’s growing power to aid academic dishonesty. According to Turnitin, an arguably problematic piece of software that aims to detect AI writing, this kind of cheating is surprisingly not prevalent. Still, students can use these tools to brainstorm, craft, or write papers and other assignments.

This sounds scary, but because the issue is so new, there isn’t much published research on it. Search engines like Google, however, have been the subject of a similar debate.

Search engines came onto the scene early in the Internet’s existence; Google itself was founded in 1998. The concern then was this: with the world at one’s fingertips and information easier to access than ever before, is critical thinking lost in the crossfire?

Nicholas Carr’s 2008 Atlantic article “Is Google Making Us Stupid?” and his subsequent writings argued that the way we read and process information is changing for the worse.

Carr quotes Maryanne Wolf, a literacy researcher, who writes that “we are not only what we read. We are how we read.”

He also shares anecdotes of how he and others can no longer read long articles, let alone books. The argument seems sound: when we don’t read long materials and think critically about them, we lose out on necessary skills.

The goal of Carr’s article was to help us understand how the Internet can become a problem. Some people, like Bryan Alexander, director of the National Institute for Technology in Liberal Education, consider the article and its arguments folly. The Internet has, overall, been a helpful tool. The key, to quote Maryanne Wolf once more, is “how we read.”

As with Google, it is not what we take from AI that makes us dumb. In that respect, does asking a person a question and receiving an answer make us either smart or dumb?

Even human experts don’t get everything right, so why would ChatGPT? Sure, getting the number of “r”s in “strawberry” wrong is embarrassing, to say the least, but the people around me have told me how to spell words wrong before. Plenty of biased or factually incorrect texts have been published just like any other. No person or program is infallible, and that is key to understanding what ChatGPT or Google can be.

The answer is critical thinking, of course. Taking any quote, writer, or program at face value (even this piece) is grossly misguided.

Teacher Kimberly Mawhiney was concerned about Google’s effects on critical thinking. If her students could google the answer to any question, how could they learn anything?

Mawhiney writes, “I put the accountability back into the students hands by asking the student questions that would lead her to the answers.”

AI won’t make us dumb unless we use it willy-nilly to write essays and answer basic questions. The accountability for making us smart or dumb lies not with LLMs or search engines but with how students and professors use them and critically evaluate the answers they’re given.

AI can be scary for many reasons, but if we take control into our own hands, its effect on critical thinking, discussion, and learning won’t be one of them.

For comments/questions about this story DM us on Instagram @thewhitatrowan or email [email protected]

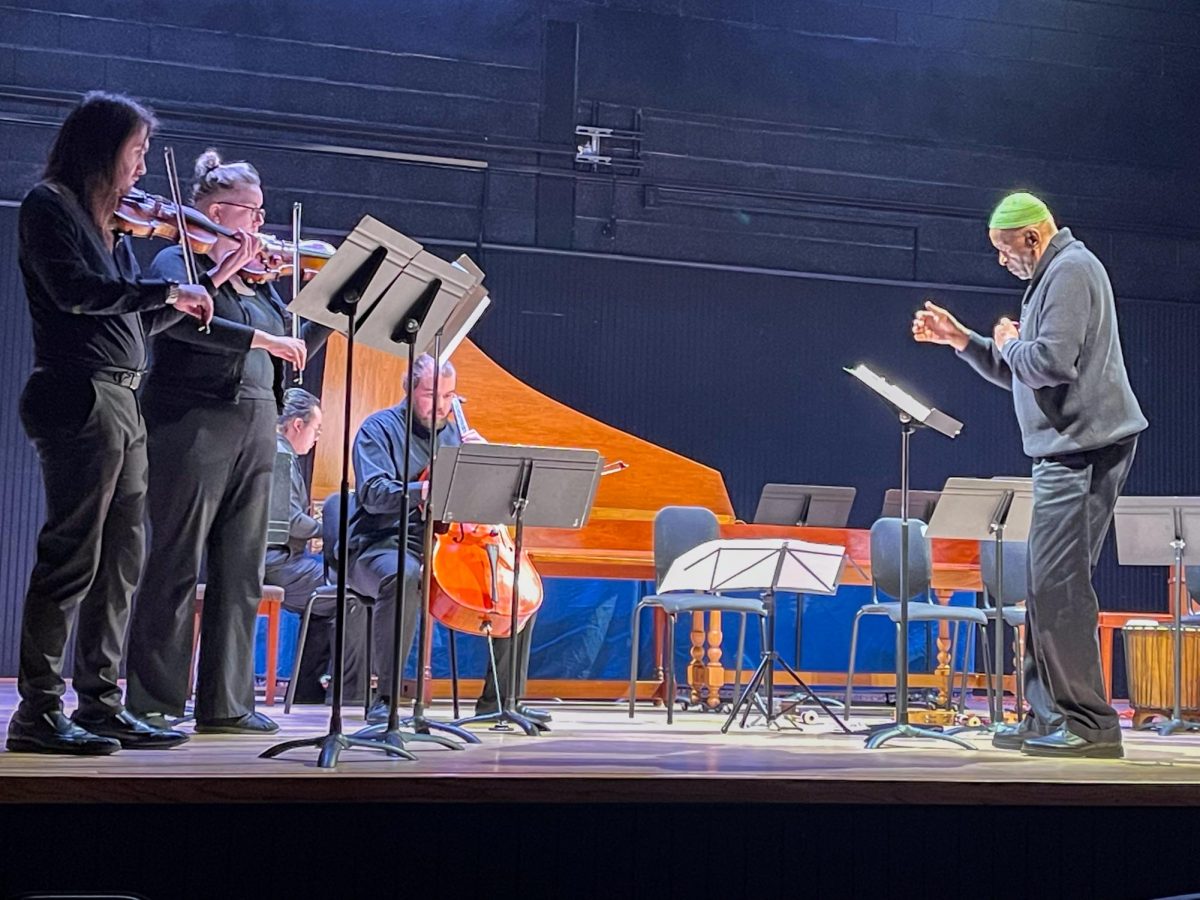